CHAPTER 8: THE PHANTOM ROUGH CUT

The giant-screen community's variant of the Question is “When will you have a rough cut?” People want to give input, get a sense of the film, and so on. I can see the disappointment in people’s eyes when I announce, “There never will be a rough cut.”

Almost all films, including giant-screen films, are made by shooting as much footage as possible and editing it down to a final cut. Pure CGI animation films are created entirely from audio and preview animations in pre-production. With either kind of film, you have something to look at and show very early in the filmmaking process. And after editing, you have the proverbial rough cut.

I’ve edited well over 100 rough cuts for everything from a 10-second promo to a feature film. I love rough cuts and the process of refining them into a fine cut. But for a rough cut you need, well, footage that you can edit. That means choices of footage: shot A or shot B? How long should shot A be? What if we moved shot C in front of shot A? And so on.

For In Saturn’s Rings, the final render of each shot is one take at the final speed and duration of the shot. For a small minority of shots, a little bit of the head or tail might be trimmed, or other minor changes might be feasible.

The rough cutting of In Saturn’s Rings all happens while working with the still photographs, before the animation ever starts. Here, resolutions are checked, layers built, animations imagined, and then final decisions made for what stays and what goes. My “cutting room floor” is full of takes, but these are all just still images, not fully rendered takes. I only fully animate what will be final in the film. Otherwise the film would take forever. Literally.

ONLY TWO EDITS

The only editing changes in the film have already been made. I had a major ankle injury in February 2014, resulting in two surgeries, one of which caused me to miss two months of work. This was the same period when we lost the first SDSS team leader and a new team had to start from scratch.

At that point, the film’s “found-footage illustrated script” included a journey to our Moon and back, recreating the journey of Apollo 17. This was possible because of the huge amount of high-resolution footage I had found from Apollo and the Lunar Reconnaissance Orbiter. But the source of my love of space and Saturn was not Apollo. It was Titan, Saturn’s largest moon, where the Huygens lander, carried by Cassini, set down: our first landing on an alien moon.

In 2014, Ian Regan, a key UK-based image-processing volunteer on the film, chatted with me about the wealth of Titan imagery coming down from Cassini via radar, cameras, and other instruments. Knowing the film was delayed, I chose to cut the Apollo 17 sequence and replace it with Titan. This was painful, as the Apollo 17 sequence represented about nine months of the film’s work to date. But since Titan was at the heart of the film’s inspiration, and now that we have the final high-resolution imagery, it was a great call. And I found a slot to use about 30 seconds of the Moon imagery in the film’s finale.

The second edit was the original finale, the last five minutes of the film. My first concept, “Earth with Saturn’s Rings,” started to appear in several YouTube clips by other people who had the same idea independently. I had mixed feelings about the concept even before then, since the animation required a visual composite that was not based on scientific fact and blurred the “no CGI” line. Fortunately, only one month of work had gone into it.

A new finale has been written and processed that is even more powerful. But you can see why the film won’t be edited. Edits to a single shot can mean weeks, months, or even years of work.

SHOTS IN THE TRAILER

To avoid complete catastrophes in the filmmaking process, I created the “check render.” It is a simplified 3- to 10-second multi-plane photo-animation, rendered from a small subset of the photographic layers (planes), that lets me check resolution, color, and so on before giving the final go-ahead for the complete process on a shot.

Only about 20% of the material in the current trailer is final footage from the film (mostly Saturn); the rest is check renders. This will be true until the film is complete.

The final renders are built in layers — the planes of multi-plane animation. The editing decisions are made here, prior to final renders. This editing, like any editing, continues until very late in the film. The rough cuts consist of folders with thousands or, in some cases, millions of images, massive photographic planes and check renders. It’s unwatchable as film and requires a great deal of imagination to see what you are looking at.

This basic fact has been the film’s biggest challenge: the people supporting the film have had to take it on great faith that it will turn out as advertised.

CHAPTER 9: THE WORK TODAY, BUILDING THE UNIVERSE

So since early 2014, the primary and often sole task on the film has been the opening section. Although other volunteers have continued improving and finishing other sections, that work won’t be wrapped up until the opening section is ready to go. The rest of the film is still about 90% complete, but most of that work has stalled because I’ve had to do a huge amount of unexpected work on the opening section myself.

To go from the Big Bang to the surface of the Earth using only photographs, you need a huge amount of photographic data. The obvious first question: is there even enough data to pull this off? That was the question years ago, back in 2009–2010, when the final vision of the film came together. The answer: “No, but yes.”

No, because the entire universe has not been photographed; in fact, only the tiniest of fractions has been photographed at any resolution. And unlike Cassini, only in one direction — from Earth looking out to the edge of space. And as you go farther away, further back in time, the images are blurrier, until it’s all noise.

But yes, because as a filmmaker I know you only need to show what the “camera” sees, just like a movie shot on a sound stage. You don’t need to build sets for every room, hallway, and hangar in the Death Star, just the ones the camera will see. All the Death Star sets in all the Star Wars films and media combined probably amount to 0.01% of the Death Star, and I’m being generous.

So I researched what was out there and that formed our plan. We figured out that we had enough to pull off the shot with photographs of objects placed to scale in accurate positions. I also knew that the shot had to be “going backwards,” as our point of view in photographs is always looking from Earth outwards.

OPENING SECTION SOURCES

So where exactly do we get photographs to cover over 13 billion years of time and space?

Big Bang. This was always a stumper, as the early universe is just elementary particles; no photographs exist. But during the last five years, quantum microscopes have imaged atoms and electrons, including the hydrogen atom, the building block of the universe. For a long time, I planned to take artistic license and recreate this section first using atoms, then using galaxy data and the cold inflation model. Some beautiful animations resulted, but since this would have been the only section of the film that was not strictly photographic, I decided on sound only until we can use real photographs. The sound is based on “Sounds of the Big Bang.”

Right after the Big Bang. We have used image data from the Cosmic Background Explorer, removing the false color.


There are no photographs of the first stars, so we move too fast to see these.

Hubble and ESO sources have been used for the earliest galaxies, from 13 billion years ago.

The Sloan Digital Sky Survey (SDSS) is used for the background and some foreground planes for the rest of the journey until we reach our Milky Way, and then as the background for the Milky Way. Hubble, the European Southern Observatory (ESO), and others are used for high-resolution foregrounds of galaxies and nebulae.

Many sources are used for the journey from the edge of the solar system to the surface of Earth.

THE TEAMS

There are actually three teams working on the opening section. The Hubble/ESO high-resolution foreground object team, led by Jason Harwell, started work in mid-2012, grew to five people, and finished in mid-2015. It includes an astronomer, Dr. Steve Danford, head of the physics department at the University of North Carolina at Greensboro (in my hometown), who supervised the scientific accuracy of the work and is an official science advisor to the film.

The current SDSS team is led by Bill Eberly. Six others, including me, have contributed to that team. A couple of physicists also work with the team, specifically to help with the very complex astrophysics mathematics involved.

The final team is the animation team. Well, team in this case means me. I always hoped to get animation help, but it turns out this type of animation is very hard, and there is no reason anyone else would want to learn it, since it can’t be used anywhere else. So I’m the sole animator on the film.

THE PROCESS

The process began by choosing our flight path through the universe, which is also a journey through time. We mapped out where and when in the universe all of our available high-resolution sources were located (allowing only those we could process in a “reasonable” amount of time). We then compared this with the areas of the universe for which there was enough SDSS data to fill the backgrounds. Working this out and mapping it in a way we could visualize was actually a very complicated process.

Once we had a flight path, Jason’s high-resolution team processed the planes of the destination galaxies and nebulae along it. They worked with Dr. Danford to make their layers as accurate as possible, given our current scientific knowledge.

Bill’s SDSS team downloaded 350,000 SDSS “fields,” long rectangular strips of the sky photographed by the survey telescope, along with the corresponding database records of the names, locations, and distances of the galaxies in each field, from a few hundred to a few thousand per field. There were several million potential galaxies in there.

So the process we used in other sections, manually cutting galaxies out of the black of space, was not an option here. Since no tools existed, we built our own. Bill’s team created code that would automatically cut out galaxies and tag each of them with the relevant location and distance data from the SDSS database. It took months to get this working right.
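The chapter does not describe how Bill’s code works. As a rough illustration only, one common way to cut objects out of an astronomical image is to threshold the pixels against the dark sky, group the bright pixels into connected blobs, and take each blob’s bounding box as a cutout. The Python sketch below does that on a tiny grid. `find_cutouts`, `tag_cutouts`, the threshold, and the catalog fields (`row`, `col`, `name`) are all hypothetical, and matching a cutout to the catalog by checking whether a catalog position falls inside its box is an assumed simplification, not the team’s method.

```python
from collections import deque


def find_cutouts(image, threshold):
    """Return inclusive bounding boxes (row0, col0, row1, col1) of bright blobs.

    Hypothetical sketch: pixels brighter than `threshold` count as "object",
    and each 4-connected group of object pixels becomes one cutout.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Breadth-first flood fill over this blob, growing its bounding box.
            queue = deque([(r, c)])
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes


def tag_cutouts(boxes, catalog):
    """Attach the first catalog entry whose pixel position falls inside each box.

    `catalog` is a hypothetical list of dicts with pixel coordinates ("row",
    "col") plus whatever sky-position and distance fields the survey provides.
    Boxes with no catalog match are dropped.
    """
    tagged = []
    for r0, c0, r1, c1 in boxes:
        for entry in catalog:
            if r0 <= entry["row"] <= r1 and c0 <= entry["col"] <= c1:
                tagged.append({"box": (r0, c0, r1, c1), **entry})
                break
    return tagged


# Tiny illustrative "field": two bright blobs on a dark background.
field = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 0],
    [0, 9, 0, 0, 0, 0],
    [0, 0, 0, 0, 8, 8],
    [0, 0, 0, 0, 8, 0],
]
print(find_cutouts(field, threshold=5))  # [(1, 1, 2, 2), (3, 4, 4, 5)]
```

Note that a sketch like this happily cuts out foreground stars and satellite trails too, since it only knows "bright" from "dark."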

We then farmed out among ourselves the process of running this code on the 350,000 SDSS fields. It took several weeks, but in the end — by late 2015 — we had approximately 5.5 million individual photographs of galaxies, each tagged with its location in the sky and distance from Earth. A remarkable achievement in itself.

But as Bill and I looked at them, a very obvious and glaring problem emerged. We had several hundred thousand images of other things we didn’t want. Unfortunately, none were real alien UFOs or giant space monsters, which at least would have been newsworthy. They were stars in our own Milky Way, jet and satellite trails, and other mundane IFOs (identified flying objects). This was a bit of a punch in the stomach — we had not realized how much of this was in SDSS data.

Again we discussed the options, and the only practical one was to go through all 5.5 million photographs and remove the bad apples. Writing a program that could do this perfectly, or even come close, was beyond the capability of computing, and attempting it would have taken many months anyway. We would still have had to inspect everything manually in the end; software just isn’t smart enough.

We considered farming the job out to volunteers, but after testing I realized it was impossible for several reasons: the judgment required to decide which items to remove; the speed and capacity of the computers needed to handle folders of tens of thousands of galaxies; and the need for very large monitors and controlled lighting to ensure consistent decisions. To have any hope of a usable result within our means, it would have to be a single person working full-time.

The only practical plan was for me to stop everything and do it myself. But given how committed we were, I did not agonize over it much. Either it got done, or we gave up in failure. I hoped it would take two to three months. It took six months, with two weeks off in the middle. It was the same thing every hour of every day I worked on it:

Load up a folder of SDSS cutouts. Anywhere from one to 100,000 galaxies, plus garbage, would be in the folder. Build a thumbnail view of the folder in Adobe Bridge.


Go through all the thumbnails at a size that corresponded to the largest size they would appear on the giant screen.

Remove the images of local stars, jet trails, and the like that contained no good galaxy candidate.

Then go back, open each photograph that had a good galaxy candidate but also contained a local star or jet trail, and trim out the intruder to leave the good galaxy candidate behind.

Back up and move on to the next folder.

I have to be honest. It’s the hardest thing I’ve ever done in my life. It took intense visual-cortex concentration. I could only do it eight hours a day (six during the last month), six days a week (five in the last month).


After the first few weeks, I thought I was losing my mind: I was starting to have really strange stuff in my head from staring at tiny objects all day while listening to music. My arms, wrists, and hands were a wreck. But I realized that by doing such an intense, right-brained, repetitive activity, my left brain was losing it. I found I could listen to podcasts and audiobooks without an issue; in fact, it helped tremendously. I bought a gaming mouse with programmable macros, used the accessibility features in the operating system, and cut my mouse clicks by over 80%.

Here’s a short timelapse of a typical day of cleaning, including running backups.

I finished intact. Well, sort of. I burst out crying when I finished. And I needed a shot in my right arm for mouse elbow. But by August 2015 we were ready to start assembly.

SDSS TILES

Of course, like any other construction project, there is always a tile job. Our background SDSS planes are massive gigapixel images with millions of galaxies, but animation software cannot handle a single image that size. So, as we’ve done in the rest of the film, we build very large images, take them apart into tiles to avoid memory issues, and then bring them back together again.

It took us four months to get the assembly process built, tested, and stable enough for the big run. During Thanksgiving week 2015, I fired off Bill’s tile assembly code, custom Java code and Photoshop scripts, on ten machines to create the 289 tiles that make up our background universe. Each tile is 10,000 by 10,000 pixels, so it’s a huge amount of data, about 250 gigapixels of image data for this alone.

The process was slow but steady. Only a couple of crashes occurred, caused by external USB drives not handling hundreds of thousands of files. The last two tiles finished two months later, on January 25, 2016.
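Splitting a huge plane into fixed-size tiles and spreading the tiles across several machines can be sketched in a few lines. This is not Bill’s Java or Photoshop code. It assumes a hypothetical square master image of 170,000 by 170,000 pixels, which at 10,000 pixels per tile gives a 17 by 17 grid, or 289 tiles, and deals them out round-robin to ten machines. `tile_grid` and `assign_round_robin` are illustrative names.

```python
def tile_grid(width, height, tile):
    """Return (col, row, x0, y0, x1, y1) for each tile; edge tiles may be smaller."""
    tiles = []
    for row, y0 in enumerate(range(0, height, tile)):
        for col, x0 in enumerate(range(0, width, tile)):
            tiles.append((col, row, x0, y0, min(x0 + tile, width), min(y0 + tile, height)))
    return tiles


def assign_round_robin(tiles, machines):
    """Deal tiles out to machines in turn so each gets a near-equal share."""
    jobs = {m: [] for m in range(machines)}
    for i, t in enumerate(tiles):
        jobs[i % machines].append(t)
    return jobs


# Hypothetical master image: 170,000 x 170,000 pixels -> a 17 x 17 grid of 10,000-pixel tiles.
tiles = tile_grid(170_000, 170_000, 10_000)
jobs = assign_round_robin(tiles, machines=10)
print(len(tiles), {m: len(t) for m, t in jobs.items()})
```

Round-robin keeps every machine within one tile of an even share, which matters when each tile takes a long time to build. A real run would also need a way to resume after a crash, like the USB drive failures described above.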

Bill and I were enormously satisfied to see how critical my six months of cleanup proved to be, even just for the background tiles. I aimed for a 99.5% accuracy rate. Reaching 99.9% would have taken me three or four years; 99% would have taken three or four months but left so many errors that the rendered tiles would have been irreparably damaged.

The 99.5% accuracy rate means just a few days of cleanup and the tiles are ready to go***.
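To put the accuracy targets in concrete terms, here is the arithmetic, assuming (the text does not say this) that the accuracy rate applies uniformly to all 5.5 million cutouts. Each half a percentage point leaves tens of thousands of misjudged images behind.

```python
TOTAL_CUTOUTS = 5_500_000  # galaxy cutouts, from the text


def residual_errors(total, accuracy):
    """Approximate number of images misjudged at a given accuracy rate."""
    return round(total * (1 - accuracy))


for accuracy in (0.99, 0.995, 0.999):
    print(f"{accuracy:.1%} accurate -> about {residual_errors(TOTAL_CUTOUTS, accuracy):,} misjudged images")
# 99.0% -> 55,000; 99.5% -> 27,500; 99.9% -> 5,500
```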

EDIT! UPDATE!

*** This is a perfect example of how rarely, if ever, you can predict the future when blazing a trail for the first time. That “few days of cleanup” I wrote about (this blog series was written mostly over the 2015 Christmas holiday) took three months! However, the tiles now look gorgeous. They are impossible to post on the web right now because of their size, but we will post them later.

NEXT STEPS

Now that we have the tiles, the next step is reassembling them into very-high-resolution background planes, arranged in scientifically accurate locations. Then we add the SDSS cutouts that are close enough to our flightpath for parallax to be a factor (our midground in the multi-plane). We have created a process to place each of them in its scientifically accurate position using the SDSS science data. Then the foreground, the layers of high-resolution galaxies from Jason Harwell’s team, is added, and the full multi-plane flythrough of the galaxy is ready to animate and render.
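Placing a cutout in its scientifically accurate position starts from a sky position and a distance. As a sketch only, assuming each cutout carries right ascension and declination in degrees plus a distance in some consistent unit, the standard spherical-to-Cartesian conversion gives a 3D point, and the distance from that point to a straight segment of the flight path decides whether the cutout belongs in the midground. The field names (`ra`, `dec`, `dist`), the single straight path segment, and the `max_dist` cutoff are hypothetical; the chapter does not say how the team’s own placement process works.

```python
import math


def to_cartesian(ra_deg, dec_deg, dist):
    """Convert right ascension / declination (degrees) and distance to x, y, z."""
    ra, dec = math.radians(ra_deg), math.radians(dec_deg)
    return (dist * math.cos(dec) * math.cos(ra),
            dist * math.cos(dec) * math.sin(ra),
            dist * math.sin(dec))


def distance_to_segment(p, a, b):
    """Shortest distance from point p to the flight-path segment a -> b."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    length_sq = sum(c * c for c in ab)
    t = 0.0 if length_sq == 0 else sum(x * y for x, y in zip(ap, ab)) / length_sq
    t = max(0.0, min(1.0, t))  # clamp so the closest point stays on the segment
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(p, closest)


def midground_candidates(galaxies, a, b, max_dist):
    """Keep galaxies close enough to the path that parallax should be noticeable."""
    return [g for g in galaxies
            if distance_to_segment(to_cartesian(g["ra"], g["dec"], g["dist"]), a, b) <= max_dist]


# Hypothetical path along the x axis and two illustrative galaxies.
path_start, path_end = (0.0, 0.0, 0.0), (100.0, 0.0, 0.0)
galaxies = [
    {"ra": 0.0, "dec": 0.0, "dist": 50.0},   # sits right on the path
    {"ra": 90.0, "dec": 0.0, "dist": 50.0},  # 50 units off to the side
]
print(midground_candidates(galaxies, path_start, path_end, max_dist=5.0))
```

A real flight path would be many segments (or a curve), and the cutoff would depend on how far the camera travels, but the filtering idea is the same.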

We are working RIGHT NOW on the first and most complex setup, Arp 273, and footage is forthcoming.

LARGEST MULTI-PLANE ANIMATION IN HISTORY

This will result in the largest, longest, most complex multi-plane animation in film history, by a factor of 150,000! Disney used up to seven planes in their most complex shots. In my research I have not heard of anyone doing foreground, midground, and background multi-plane animations to simulate live action using more than seven planes.

And the background tiles total nearly 8.3 terapixels, which, according to Wikipedia, makes this the largest composite image processing task by a factor of ten.

We will have over 1.2 million planes in our midground, about 10,000 foreground planes, and 12 background planes. In theory, the film could be made with a physical camera, as multi-plane animation does not require a computer. But our master background plane for the opening sequence would have to be 0.6 miles (1 kilometer) wide, with a camera system that could go from a few inches to 3 miles (5 kilometers) above it! Not to mention 1.2 million massive, seamless, perfectly transparent glass plates of varying sizes.
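Why so many planes matter comes down to parallax. In a simple pinhole-camera model, a flat plane at distance d in front of the camera appears magnified by f / d, where f is the focal length, so as the camera moves forward, near planes grow much faster than far ones. The sketch below computes per-frame scale factors for a hypothetical stack of planes during a straight camera move; it illustrates the multi-plane principle in general and is not the film’s animation software.

```python
def plane_scale(focal, camera_z, plane_z):
    """On-screen magnification of a flat plane at depth plane_z (pinhole model)."""
    depth = plane_z - camera_z
    if depth <= 0:
        raise ValueError("plane is at or behind the camera")
    return focal / depth


def dolly(focal, planes, start_z, end_z, frames):
    """Scale of every plane on each frame of a straight camera move along z."""
    if frames < 2:
        raise ValueError("need at least two frames")
    result = []
    for i in range(frames):
        camera_z = start_z + (end_z - start_z) * i / (frames - 1)
        result.append([plane_scale(focal, camera_z, z) for z in planes])
    return result


# Hypothetical stack: near, middle, and far planes at depths 2, 4, and 8.
for frame in dolly(focal=1.0, planes=[2.0, 4.0, 8.0], start_z=0.0, end_z=1.0, frames=5):
    print([round(s, 3) for s in frame])
```

With the camera moving from depth 0 to 1, the nearest plane doubles in size while the farthest grows by only about 14%, and that difference is the depth cue multi-plane animation relies on.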

And I would love (not) to be the person keeping dust, birds, and low-flying aircraft out of the camera rig!

THE NARRATOR

I had been mulling over the narration issue for some time. Ideally, I wanted the film to work without narration, and I designed it that way. But as the final renders rolled out and a rough assembly started coming together, I realized that relying entirely on title cards would result either in (1) too many title cards breaking the flow or (2) people having little to no idea what they were looking at.

This was especially true of the fantastic flyover of Saturn’s moon Titan put together by the hard work and dedication of lead image processor Ian Regan. I recalled hearing Stanley Kubrick talk about narration as a tool to keep a film from becoming ungainly.

So in early 2017, I started seriously reconsidering narration. I had considered it many times before; some readers may recall that early versions of the script were heavily narrated. But I had dropped narration by the 2010 versions of the script. Even though theaters and distributors wanted it (and I had gotten quotes from several A- and B-list actors; don’t ask, it’s ex$pensive), it had remained off the table.

But now it was back on and stayed on. I spent a lot of time researching potential people. I wanted these qualities in a narrator:

A gifted and skilled narrator with a strong body of work

A person with global, science, space, and sci-fi appeal

An actor who would be open to a project from unknowns like us

A person likely to be of quality character and easy to work with

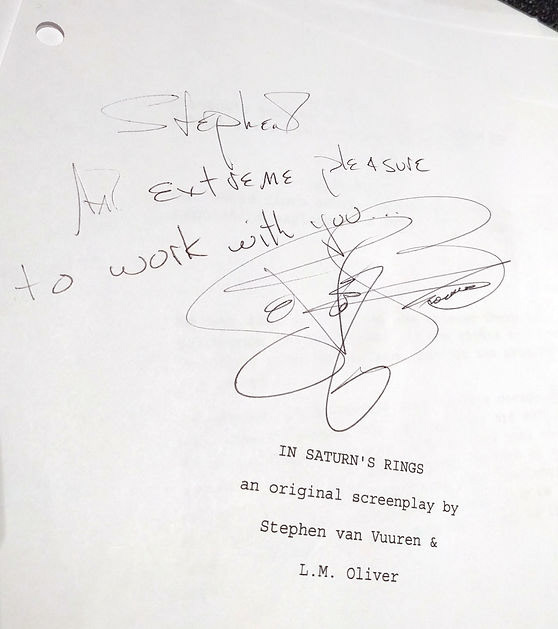

One person quickly went to the top of the list: LeVar Burton. So I started there and, long story short, I ended there. He and his agent were open to us and impressed by the project from the beginning. Negotiations were smooth, and we signed a contract before the end of 2017. Once again, the film’s amazing supporters stepped up in a huge way to make sure it happened. Because of LeVar’s schedule and the film’s schedule, it had to happen fast, and it did. It was truly meant to be.

All that needs to be said about the narration recording session is that LeVar Burton, as an actor, as a voice artist, and as a person, is everything you would want him to be.

We recorded him just in time for our first rough cut screening at a private industry event in March 2018. To be honest, the very first time I heard narration over footage I had watched for over a decade without it, it was a bit weird.

But now, after watching the film many times as it was being finished, it seems even more bizarre without narration. LeVar Burton brings a humanity, warmth, and intelligence to the film that title cards alone could not. It’s a better film with him guiding our journey.

That being said, we hope to offer some alternative versions of the film, without music or without narration, for special events and live presenters. But my feeling is that the best experience of In Saturn’s Rings will always be Images + Music + LeVar.

CHAPTER 10: THE HOME STRETCH

And so, we finished. Well, “finished” in the sense that the giant-screen version of the film was completed in early May 2018. We still have to render the fulldome version, which will churn on computers all summer and into the fall this year.

The home stretch was a much simpler, more enjoyable process as the film finally came together. Well, maybe not “simpler,” as filmmaking is always complex and difficult, but compared to the daunting challenges of getting multi-plane photo-animation to work at giant-screen resolution, or figuring out how to build a photographic universe for the opening section, it was much more straightforward.

But one major event happened in the last months of the film's completion.

Photographs from space are the true incredible journey of the human experience, allowing us to go from the beginning of time to the surface of alien moons. In Saturn’s Rings was started with the guiding principle that audiences respond completely differently to real photographs than they do to computer-generated images. The online clips and test footage screenings over the past few years have proven this time and time again.

That is why supporters and volunteers have dedicated themselves to working on the film for so long, with little to show for it. We shared the conviction that these photographs need to be seen, and that only the giant screen can present them with their true sense of scale, with galaxies, planets, and moons completely filling your field of view.

WOULD I DO IT AGAIN?

This is a question I get nearly every time I make a public presentation on the film. The answer is both simple and not so simple.

Yes and no.

If I had known back in 2004, or even in 2007, what effort and resources the film would require, I would have passed on the project, as so many others have. Quite frankly, it’s a lifetime’s worth of work with no prospect of making money, and it takes almost every penny you own and more than a decade out of your life for a 40-minute movie.


But if I knew then what I know now about the impact the film has already had on everyone it has touched and what it will look like — absolutely yes.

I can’t speak to how good or important In Saturn’s Rings will be. But I do know it is the most impactful, life-changing, and profound project of my life. I spent 9 months looking all day, every day, at over five million galaxies as just one part of this journey. Two key volunteers were inspired, in part by working on the film’s content, to change their lives and careers: one went from Web designer to acclaimed painter, the other from pharma lab geek to JPL Titan scientist.

And volunteers, backers, and fans constantly report on how spending time with photographs from space has changed them forever as people. So I have no doubt that this film has been more than worth it before it’s ever done. It truly is a journey, and not a destination.

Barring a UFO invasion, giant asteroid strike, or other calamity that kills me, my computers, and the key volunteers, the premiere of In Saturn’s Rings will take place on May 4, 2018. But that is just another step on the journey that is In Saturn’s Rings. The fulldome version will follow in the fall of 2018.

"We shall not cease from exploration, and the end of all our exploring will be to arrive where we started and know the place for the first time." - T.S. Eliot

- Stephen van Vuuren

TECHNICAL SUMMARY (for the geeks)

The film will have about 7.5 million photographs from telescopes, time-lapse sources, spacecraft, and historical sources, all manually touched by the film’s image processors.

Roughly 50 million possible photographs have been considered over the course of the film as potential sources but rejected due to content, resolution, or suitability for a cohesive journey.

To date, over 30 computers have been used for the film, up to 21 at one time, with 19 currently in my basement and two at image processors.

Approximately 1 terabyte of RAM, 150 processing cores, and almost two-thirds of a petabyte of computing resources have been used.

The film will contain imagery from Cassini-Huygens, Solar Dynamics Observatory, Sloan Digital Sky Survey, European Southern Observatory, Voyagers 1 and 2, Vikings 1 and 2, Dawn, New Horizons, Rosetta, Venus Express, Lunar Reconnaissance Orbiter, Hubble Space Telescope, Spitzer, HiRES, Apollos 8, 10, 11, 12, and 17, International Space Station, and several Space Shuttle missions, plus more than 25 Earth-based astro-photographers. Additionally, over 100 historical and public domain image archives and Google image searches have been sourced for additional photographs.

Over 1,000 individual donors have contributed nearly $265,000 over ten years to support the film. No corporation or organization has donated cash to the film, except for a $750 grant from the Arts Council of Greensboro, NC.

Over 100 volunteers from more than 25 countries have worked on the film.